
How to use Deep Learning when you have Limited Data

One common barrier to using deep learning is the amount of data needed to train a model. Large datasets are required because these models have a large number of parameters that must be learned from the data.
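To make the link between parameters and data concrete, here is a minimal sketch that counts the weights and biases in a fully connected network. It assumes plain dense layers: a layer mapping `n_in` inputs to `n_out` outputs contributes `n_in * n_out` weights plus `n_out` biases. The layer sizes in the example are hypothetical and do not describe any model mentioned in this post.

```python
def dense_param_count(layer_sizes):
    """Count trainable weights and biases in a fully connected network.

    Each consecutive pair (n_in, n_out) is one dense layer with an
    n_in x n_out weight matrix and n_out bias terms.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical small classifier: 28x28 pixel inputs, two hidden layers, 10 classes.
print(dense_param_count([784, 512, 512, 10]))  # 669706
```

Even this small, hypothetical network has roughly 670,000 parameters to fit, which is why training from scratch tends to need a lot of labelled data.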

Applications such as language translation, playing strategy games and self-driving cars have required millions of training examples.

nanonets.ai helps build Machine Learning models with less data.

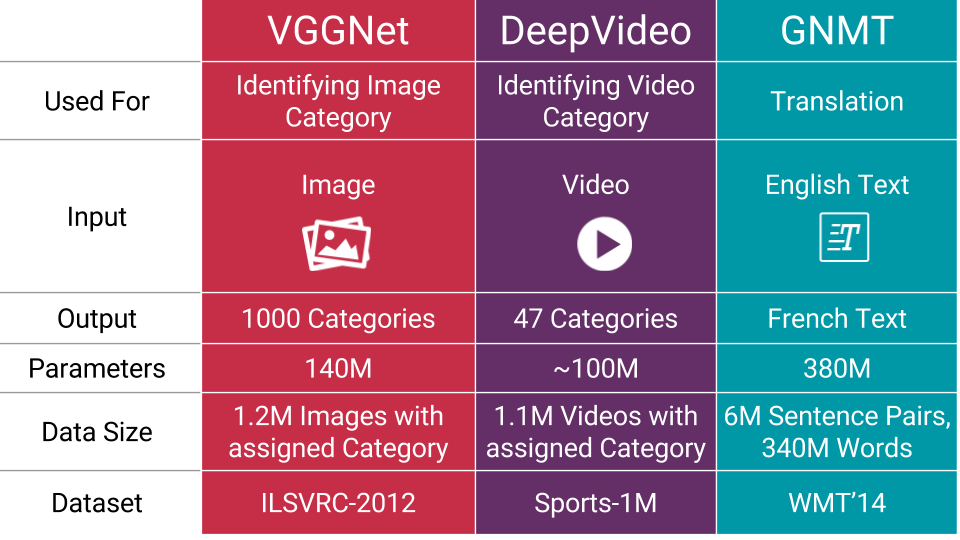

A few examples of the number of parameters in these recent models are:

Recent advances in Deep Learning have made it possible to build rich representations of data that transfer across tasks. Using this technology, we pre-train models on extremely large datasets that contain varied information. NanoNets are then added on top of the existing pre-trained model and trained on your data to solve your specific problem. Because NanoNets are smaller than traditional networks, they require much less data and time to build.
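The post does not describe how NanoNets are implemented, so the following is only a generic sketch of the idea it describes: keep a pre-trained feature extractor fixed and train a small model on top using a handful of labelled examples. Everything here is hypothetical: `frozen_features` is a hand-written stand-in for a large pre-trained backbone, `SmallHead` is a plain logistic-regression head, and the four-example dataset and learning rate are made up for illustration.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def frozen_features(x):
    """Hypothetical stand-in for a pre-trained backbone.

    A real backbone would be a large network trained on a big, varied dataset.
    Here it is a fixed function that is never updated during training.
    """
    a, b = x
    return [a, b, a * b]


class SmallHead:
    """Logistic-regression head: the only part trained on the new task."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def num_params(self):
        return len(self.w) + 1

    def predict_proba(self, x):
        f = frozen_features(x)
        return sigmoid(sum(wi * fi for wi, fi in zip(self.w, f)) + self.b)

    def loss(self, data):
        """Mean binary cross-entropy over the dataset."""
        eps = 1e-12
        total = 0.0
        for x, y in data:
            p = self.predict_proba(x)
            total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
        return total / len(data)

    def fit(self, data, lr=0.5, steps=200):
        """Full-batch gradient descent on the head only; the backbone is untouched."""
        n = len(data)
        for _ in range(steps):
            grad_w = [0.0] * len(self.w)
            grad_b = 0.0
            for x, y in data:
                f = frozen_features(x)
                err = self.predict_proba(x) - y  # d(loss)/dz for logistic regression
                for i, fi in enumerate(f):
                    grad_w[i] += err * fi / n
                grad_b += err / n
            self.w = [wi - lr * gi for wi, gi in zip(self.w, grad_w)]
            self.b -= lr * grad_b


# Four labelled examples: label 1 when both inputs have the same sign.
data = [((1, 1), 1), ((-1, -1), 1), ((1, -1), 0), ((-1, 1), 0)]
head = SmallHead(n_features=3)
before = head.loss(data)
head.fit(data)
after = head.loss(data)
print(head.num_params(), after < before)  # 4 True
print([round(head.predict_proba(x)) for x, _ in data])  # [1, 1, 0, 0]
```

Only the four head parameters are learned, so four examples are enough here. That works because the (hypothetical) frozen features already expose the useful signal `a * b`, which is the role a well pre-trained representation plays in practice.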