Ethoscope: the Open Source Platform for Real-Time, High-Throughput Analysis of Behaviour

Researchers have created a Raspberry Pi-powered robotic lab that detects and profiles the behaviour of thousands of fruit flies in real time.

The researchers, from Imperial College London, built the mini Pi-powered robotic lab to help scale up analyses of fruit flies, which have become a popular proxy for scientists studying human genes and the wiring of the brain.

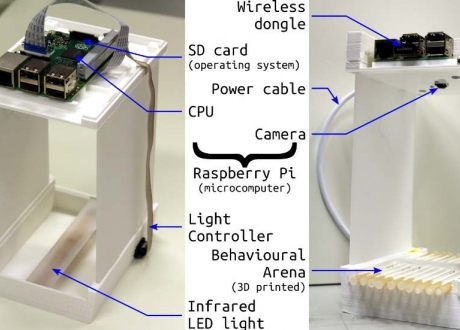

The Raspberry Pi-based ethoscope gives scientists a modular design at a cost of €100 per ethoscope. Besides the Raspberry Pi, each ethoscope requires an HD camera and a box-type frame with several compartments. The team 3D-prints its frames, but the researchers have also provided designs and instructions for building them from LEGO bricks or folded cardboard.

“The ethoscopes used by the team are 3D printed, but they can be made out of folded card or paper and even LEGO bricks. The additional computer components – the Raspberry Pi and Arduino board – are cheap and easily accessible, and all software and construction specifications are freely available online.”

The Raspberry Pi and camera sit at the top of the frame, observing and recording a "behavioral arena" deck at the bottom, which is illuminated by infrared LEDs. The researchers offer eight 3D-printable arena designs for different types of study, such as sleep analysis, decision making, and feeding.

On the software side, the researchers used a supervised machine learning algorithm to develop a tracking module and a real-time behavioral annotator that automatically labels each fly's state of activity: walking, making micro-movements (such as eating or laying an egg), or not moving.
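The article does not describe the annotator's model, so the following is only a minimal sketch of what supervised per-frame labelling could look like. It assumes two hypothetical features per frame (a speed derived from tracked positions and an `activity` score for small pixel changes on the fly) and swaps in a simple nearest-centroid classifier as a stand-in. The names `speeds`, `NearestCentroid`, and the training values are illustrative, not taken from the ethoscope software.

```python
from math import hypot
from statistics import mean, pstdev

# One frame's hypothetical features: (speed, activity).
#   speed    - distance moved since the previous frame, per second
#   activity - assumed score (0..1) for small pixel changes on the fly's body
Features = tuple[float, float]


def speeds(positions: list[tuple[float, float]], dt: float) -> list[float]:
    """Speed between consecutive tracked (x, y) positions sampled every dt seconds."""
    return [hypot(x1 - x0, y1 - y0) / dt
            for (x0, y0), (x1, y1) in zip(positions, positions[1:])]


class NearestCentroid:
    """Stand-in supervised classifier: z-score each feature using the
    training data, then label a frame with the closest class mean."""

    def fit(self, X: list[Features], y: list[str]) -> "NearestCentroid":
        columns = list(zip(*X))
        self.mu = [mean(c) for c in columns]
        # Fall back to 1.0 if a feature never varies, to avoid dividing by zero.
        self.sd = [pstdev(c) or 1.0 for c in columns]
        scaled = [self._scale(x) for x in X]
        self.centroids: dict[str, list[float]] = {}
        for label in set(y):
            members = [z for z, lab in zip(scaled, y) if lab == label]
            self.centroids[label] = [mean(c) for c in zip(*members)]
        return self

    def _scale(self, x: Features) -> list[float]:
        return [(v - m) / s for v, m, s in zip(x, self.mu, self.sd)]

    def predict(self, x: Features) -> str:
        z = self._scale(x)
        # Squared Euclidean distance to each class centroid; smallest wins.
        return min(
            self.centroids,
            key=lambda lab: sum((a - b) ** 2 for a, b in zip(z, self.centroids[lab])),
        )


# Hypothetical hand-labelled training frames.
X_train = [(0.0, 0.01), (0.1, 0.02), (0.2, 0.30), (0.3, 0.35), (5.0, 0.60), (6.0, 0.70)]
y_train = ["immobile", "immobile", "micro-movement", "micro-movement",
           "walking", "walking"]

clf = NearestCentroid().fit(X_train, y_train)
print(clf.predict((5.5, 0.65)))  # walking
```

Standardising the features before measuring distance keeps the speed column, which spans a much wider range than the activity score, from dominating the classification.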

Each ethoscope is powered via USB and can be controlled from a PC through a web interface. The Raspberry Pi offloads data over Wi-Fi to PCs for analysis, so experiments are not constrained by the storage capacity of the Raspberry Pi.
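The offloading idea can be illustrated with a bounded on-device buffer. This is a hypothetical sketch, not the ethoscope's actual transfer mechanism: the article does not say how data reaches the PC, so `OffloadBuffer` and its `send` callback (standing in for a transfer over Wi-Fi) are assumptions. Records wait in a fixed-size queue and are cleared only after the remote side reports that it accepted them.

```python
from collections import deque
from typing import Callable

Record = dict  # e.g. {"frame": 42, "x": 10.5, "y": 3.2, "state": "walking"}


class OffloadBuffer:
    """Hypothetical bounded buffer on the device that hands batches of records
    to a remote sink, so local storage never grows with experiment length."""

    def __init__(self, capacity: int, send: Callable[[list[Record]], bool]):
        self.pending: deque[Record] = deque(maxlen=capacity)
        self.send = send   # assumed transport, e.g. an upload over Wi-Fi
        self.dropped = 0   # records evicted because the queue was full

    def append(self, record: Record) -> None:
        if len(self.pending) == self.pending.maxlen:
            self.dropped += 1  # deque(maxlen=...) will evict the oldest record
        self.pending.append(record)

    def flush(self) -> int:
        """Send everything pending; keep it locally if the sink reports failure."""
        batch = list(self.pending)
        if batch and self.send(batch):
            self.pending.clear()
            return len(batch)
        return 0
```

If `send` returns `False` (for example, the PC is unreachable), the batch simply stays queued for the next `flush`. Evicting the oldest records once the queue is full is only one possible policy; pausing acquisition or spilling to the SD card would be alternatives.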